June 18, 2025

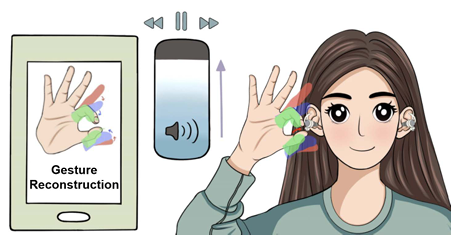

Imagine adjusting your earphones’ volume not with buttons or screens, but with a simple wave of your hand. Yongjie Yang, a PhD student in computer science at the School of Computing and Information (SCI), has made this futuristic vision a reality.

Yang is a student of Longfei Shangguan, an Assistant Professor in SCI’s Department of Computer Science. Together, they developed a gesture-control system that lets users adjust volume by making a knob-turning motion a few inches from their head.

The idea came to Yang on a long-haul flight, when he noticed he could hear the movie a passenger in the next row was watching, even though both of them were wearing earbuds. He thought, “If sound escapes so easily from the earbuds, could we harness that leak?”

That question led to the creation of LeakyFeeder. The team – including Yang, Shangguan, Pitt postdocs Tao Chen and Zhenlin An, Shirui Cao from the University of Massachusetts Amherst, and Xiaoran Fan from Google – designed a system that repurposes those escaping sound waves as a Sound Navigation and Ranging (SONAR) System. By emitting ultrasonic signals and detecting how they bounce off a user’s hand, the earbuds can interpret gestures and translate them into actions like turning the volume up or down.
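For readers curious about the sonar principle described above, here is a minimal Python sketch of echo ranging. It is an illustration only and not the LeakyFeeder method: the sample rate, chirp frequencies, attenuation, and every function name are assumptions chosen for the example, and the echo is simulated rather than recorded. It emits a short near-ultrasonic chirp, finds the delay at which the received signal best matches the chirp, and converts that round-trip delay into a distance.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature
SAMPLE_RATE_HZ = 48_000     # assumed audio sample rate (hypothetical)


def make_chirp(n_samples=96, f_start_hz=18_000.0, f_end_hz=22_000.0):
    """Build a short linear chirp sweeping from f_start_hz to f_end_hz."""
    duration_s = n_samples / SAMPLE_RATE_HZ
    sweep_rate = (f_end_hz - f_start_hz) / duration_s
    chirp = []
    for i in range(n_samples):
        t = i / SAMPLE_RATE_HZ
        phase = 2.0 * math.pi * (f_start_hz * t + 0.5 * sweep_rate * t * t)
        chirp.append(math.sin(phase))
    return chirp


def simulate_echo(probe, distance_m, attenuation=0.2, total_samples=512):
    """Simulate a single, noise-free reflection from a hand at distance_m."""
    # The sound travels to the hand and back, so the path is twice the distance.
    delay = round(2.0 * distance_m / SPEED_OF_SOUND_M_S * SAMPLE_RATE_HZ)
    received = [0.0] * total_samples
    for i, sample in enumerate(probe):
        if delay + i < total_samples:
            received[delay + i] += attenuation * sample
    return received


def estimate_delay_samples(probe, received):
    """Return the lag (in samples) where the probe best matches the recording."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(probe) + 1):
        score = sum(p * received[lag + i] for i, p in enumerate(probe))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def estimate_distance_m(probe, received):
    """Convert the round-trip delay into a one-way distance in meters."""
    delay_s = estimate_delay_samples(probe, received) / SAMPLE_RATE_HZ
    return SPEED_OF_SOUND_M_S * delay_s / 2.0


probe = make_chirp()
received = simulate_echo(probe, distance_m=0.10)
print(f"estimated hand distance: {estimate_distance_m(probe, received):.3f} m")
```

A single distance estimate like this is far simpler than what the team describes: a real recording would also contain noise, the direct speaker-to-microphone path, and reflections from the head and surroundings, and recognizing a gesture requires tracking how the echoes change over time.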

“One of the hardest parts of this project was reconstructing 3D hand motion from very limited signals. Most systems rely on multiple sensors or cameras to estimate hand position, but we only have a single microphone and a single speaker for the hardware limitation,” explained Yang.

To solve this problem, the team implemented an artificial intelligence (AI) system that, in a sense, ‘fills in the gaps’ of human motion. It uses a Transformer model trained on thousands of hand movements to predict how a gesture unfolds over time, even when only fragments of the motion are detectable.

This advance in gesture technology points to a broader shift from touchscreens toward more natural, spatial ways of interacting with devices. As a young researcher in the field, Yang is helping forge a path toward a cost-effective, simplified approach to AI-driven gesture control, showing that advanced technology doesn’t always require complex hardware or resources. In the future, this work could help others in the field use AI models to enhance hands-free interaction, making technology more intuitive and accessible.

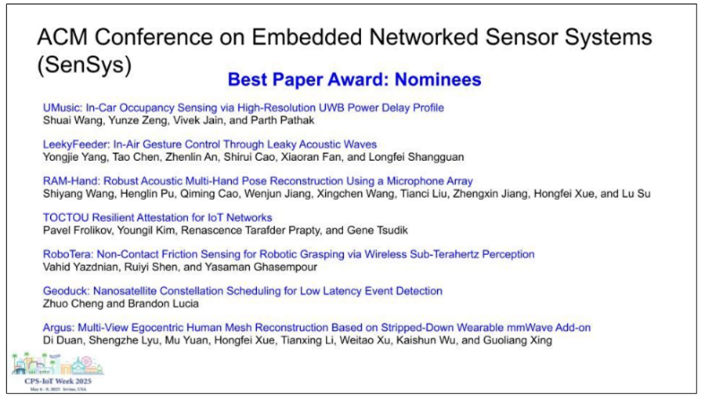

Their research, reported in the article “LeakyFeeder: In-Air Gesture Control Through Leaky Acoustic Waves”, was nominated for Best Paper at this year’s ACM Conference on Embedded Networked Sensor Systems (ACM SenSys). With hundreds of submissions and an acceptance rate of around 20%, being named one of just seven Best Paper nominees is an outstanding accomplishment and a strong reflection of the innovation coming out of SCI.

“Being nominated for Best Paper at ACM SenSys 2025 was incredibly encouraging. This work was truly a team effort, and I’m especially grateful to our collaborators from UMass and Google,” Yang reflected.

Congratulations to Yongjie Yang, Assistant Professor Longfei Shangguan, and their collaborators on the nomination at ACM SenSys ’25! Read more about the event.